Procedural Assets with DX11

A typical AAA console game takes around 18 months to create and has a team of 80 artists. That gives the studio the luxury of having every asset lovingly crafted and placed in the world. Smaller developers do not have this luxury, so being able to create assets automagically using procedural generation is appealing.

Another reason why procedural generation might be interesting is that load times on consoles are not getting any shorter, and nothing kills fun like a loading screen. It should be obvious that generating a city from a single 32-bit number will be quicker than loading 400 MiB of assets from a Blu-ray drive. This is particularly relevant for downloadable content and mobile platforms.

As a final personal motivation, I wanted to spend my time learning DX11 and making stuff that looks nice, rather than spending ages writing a file importer for a modelling package. :-)

What are Procedural Assets?

A great example of a procedural asset is a landscape based on a fractal image. We generate a greyscale image using a mathematical function. If we then convert the brightness of each pixel into a height value and render the result as a 3D mesh, we get something that can look remarkably realistic. Once we have created this landscape, we can populate it with trees, cities, etc., created using simple rules. For instance, a certain type of pine tree might only grow above 2000 feet on south-facing slopes no steeper than 45 degrees.
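To make the "mathematical function" concrete, here is a minimal C++ sketch that fills a greyscale height field with values in [0, 1] by summing octaves of smoothly interpolated lattice noise (fractional Brownian motion over value noise). Value noise is a simpler stand-in for Ken Perlin's gradient noise, not his algorithm, and the hash constants and the `fractalHeightField` signature are hypothetical. The whole field is reproducible from a single 32-bit seed.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical integer hash: maps a lattice point and seed to a value in [0, 1].
float latticeValue(int32_t x, int32_t y, uint32_t seed)
{
    uint32_t h = static_cast<uint32_t>(x) * 374761393u +
                 static_cast<uint32_t>(y) * 668265263u + seed * 2246822519u;
    h = (h ^ (h >> 13)) * 1274126177u;
    h ^= h >> 16;
    return static_cast<float>(h & 0xFFFFFFu) / 16777215.0f;
}

// Value noise: bilinear blend of the four surrounding lattice values, using a
// smoothstep fade so there are no visible creases along lattice lines.
float valueNoise(float x, float y, uint32_t seed)
{
    int32_t x0 = static_cast<int32_t>(std::floor(x));
    int32_t y0 = static_cast<int32_t>(std::floor(y));
    float fx = x - static_cast<float>(x0);
    float fy = y - static_cast<float>(y0);
    float sx = fx * fx * (3.0f - 2.0f * fx);
    float sy = fy * fy * (3.0f - 2.0f * fy);
    float v00 = latticeValue(x0, y0, seed);
    float v10 = latticeValue(x0 + 1, y0, seed);
    float v01 = latticeValue(x0, y0 + 1, seed);
    float v11 = latticeValue(x0 + 1, y0 + 1, seed);
    float row0 = v00 + sx * (v10 - v00);
    float row1 = v01 + sx * (v11 - v01);
    return row0 + sy * (row1 - row0);
}

// Fractional Brownian motion: each octave doubles the frequency and halves the
// amplitude; dividing by the summed amplitudes keeps the result in [0, 1].
std::vector<float> fractalHeightField(int size, int octaves,
                                      float baseFrequency, uint32_t seed)
{
    assert(size > 0 && octaves > 0);
    std::vector<float> heights(static_cast<std::size_t>(size) * size);
    for (int y = 0; y < size; ++y)
    {
        for (int x = 0; x < size; ++x)
        {
            float frequency = baseFrequency, amplitude = 1.0f;
            float sum = 0.0f, totalAmplitude = 0.0f;
            for (int o = 0; o < octaves; ++o)
            {
                // A different seed per octave decorrelates the layers.
                sum += amplitude * valueNoise(x * frequency, y * frequency,
                                              seed + static_cast<uint32_t>(o));
                totalAmplitude += amplitude;
                frequency *= 2.0f;
                amplitude *= 0.5f;
            }
            heights[static_cast<std::size_t>(y) * size + x] = sum / totalAmplitude;
        }
    }
    return heights;
}
```

For example, `fractalHeightField(256, 6, 1.0f / 64.0f, seed)` gives a 256 × 256 map whose largest features span roughly 64 samples; each extra octave adds finer detail at half the strength.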

Rather than go into a lot of detail on how fractals and such work, I suggest you take a look at Ken Perlin's website. Mr. Perlin is a great speaker, and if you ever get the chance to hear him speak you should take it.

Fractal Landscapes with Terragen Classic

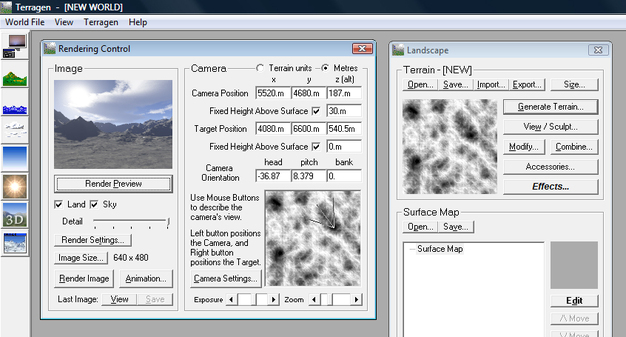

We could generate a landscape using a fractal algorithm in our game, but initially I wanted to use a terrain generator to produce some nice-looking content. There are many packages available for doing this, and they are often free or inexpensive for personal use.

I have used Bryce and TerrainBuilder but one of my favorites is Terragen.

This may seem like cheating, but we get the advantage of using a sophisticated tool along with the bandwidth reduction of loading only a simple height field at run time, rather than a large mesh with normals, texture coordinates, etc. It is also possible to combine a height field with other data. For instance, if we needed a flat area where a race track or city might sit, we could generate a bitmap that marks it and blend that with the data from Terragen.
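A minimal sketch of that blend, assuming the Terragen height field and the mask bitmap have both been loaded as same-sized arrays of floats, with the mask normalised to [0, 1]; `flattenWithMask` and `flatHeight` are hypothetical names. Linear interpolation is just one choice: painting soft edges into the mask gives a gradual transition into the flattened area.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Blend a height field towards a fixed height wherever a mask asks for flat ground.
// mask = 0 keeps the original height, mask = 1 replaces it with flatHeight,
// and values in between give a smooth transition at the edges of the area.
std::vector<float> flattenWithMask(const std::vector<float>& heights,
                                   const std::vector<float>& mask,
                                   float flatHeight)
{
    assert(heights.size() == mask.size());
    std::vector<float> result(heights.size());
    for (std::size_t i = 0; i < heights.size(); ++i)
    {
        float m = std::min(std::max(mask[i], 0.0f), 1.0f); // clamp to [0, 1]
        result[i] = heights[i] + m * (flatHeight - heights[i]);
    }
    return result;
}
```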

Generating Data to Render

To render a height field, we need to supply two parameters: the distance between neighbouring samples in the XZ plane, and the scale applied to each sample value in the Y (height) direction.
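A minimal CPU-side sketch of vertex generation, assuming the height field is a row-major `std::vector<float>` with one row per Z step; `TerrainVertex`, `xzSpacing` and `yScale` are hypothetical names for the two parameters described above, and the index buffer (two triangles per grid cell) is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct TerrainVertex
{
    float x, y, z; // world-space position
    float u, v;    // texture coordinates, 0..1 across the whole map
};

// Turn a width x depth height field (row-major, one row per z) into a vertex grid.
// xzSpacing: world distance between neighbouring samples in X and Z.
// yScale:    world height per unit of sample value.
std::vector<TerrainVertex> buildGridVertices(const std::vector<float>& heights,
                                             int width, int depth,
                                             float xzSpacing, float yScale)
{
    assert(width >= 2 && depth >= 2);
    assert(heights.size() == static_cast<std::size_t>(width) * depth);
    std::vector<TerrainVertex> vertices;
    vertices.reserve(heights.size());
    for (int z = 0; z < depth; ++z)
    {
        for (int x = 0; x < width; ++x)
        {
            TerrainVertex vtx;
            vtx.x = x * xzSpacing;
            vtx.y = heights[static_cast<std::size_t>(z) * width + x] * yScale;
            vtx.z = z * xzSpacing;
            // Linear UVs; the pixel shader scales them to tile the detail textures.
            vtx.u = static_cast<float>(x) / static_cast<float>(width - 1);
            vtx.v = static_cast<float>(z) / static_cast<float>(depth - 1);
            vertices.push_back(vtx);
        }
    }
    return vertices;
}
```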

Given this information, generating the vertex positions is simple. The normals needed for lighting require a little more work, but nothing too complex: for each vertex, compute the surface normals of the surrounding triangles and average them to get the vertex normal. The texture coordinates are just linear and run from 0.0f to 1.0f across the map, because they will be scaled in the shader.
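The normal-averaging step could look like the following sketch. It assumes the vertex positions are laid out as a row-major grid with X and Z increasing along the grid indices, and that each cell is split into two triangles; all names are hypothetical. Unit face normals are summed and renormalised, which gives a plain average; summing the unnormalised cross products instead would weight each triangle by its area.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

static Vec3 cross(Vec3 a, Vec3 b)
{
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

static Vec3 normalize(Vec3 v)
{
    float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    if (len == 0.0f)
        return {0.0f, 1.0f, 0.0f}; // degenerate: fall back to straight up
    return {v.x / len, v.y / len, v.z / len};
}

// Per-vertex normals for a width x depth grid of positions (row-major, one row per z).
// Each triangle's unit face normal is added to its three corners, then the sums
// are renormalised.
std::vector<Vec3> computeVertexNormals(const std::vector<Vec3>& positions,
                                       int width, int depth)
{
    assert(width >= 2 && depth >= 2);
    assert(positions.size() == static_cast<std::size_t>(width) * depth);
    std::vector<Vec3> sums(positions.size(), Vec3{0.0f, 0.0f, 0.0f});
    auto addFace = [&](std::size_t i0, std::size_t i1, std::size_t i2) {
        Vec3 n = normalize(cross(sub(positions[i1], positions[i0]),
                                 sub(positions[i2], positions[i0])));
        for (std::size_t i : {i0, i1, i2})
        {
            sums[i].x += n.x;
            sums[i].y += n.y;
            sums[i].z += n.z;
        }
    };
    for (int z = 0; z + 1 < depth; ++z)
    {
        for (int x = 0; x + 1 < width; ++x)
        {
            std::size_t a = static_cast<std::size_t>(z) * width + x; // (x,   z)
            std::size_t b = a + width;                              // (x,   z+1)
            std::size_t c = a + 1;                                  // (x+1, z)
            std::size_t d = b + 1;                                  // (x+1, z+1)
            // These corner orders give face normals with positive Y (pointing up).
            addFace(a, b, c);
            addFace(d, c, b);
        }
    }
    std::vector<Vec3> normals(positions.size());
    for (std::size_t i = 0; i < sums.size(); ++i)
        normals[i] = normalize(sums[i]);
    return normals;
}
```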

Rendering the Data

There are many fun ways to shade a model like this. A traditional approach is a gradient ramp indexed by height, running from blue through sand, green and brown to white. This gives the look of blue oceans, sandy beaches, green forests, rocky slopes and snow-capped peaks. However, I was hoping to achieve something a little more realistic.
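For comparison, the traditional gradient ramp can be sketched as a lookup keyed on normalised height, with linear interpolation between colour keys. The key heights and colours below are made-up values for illustration only, and the type and function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>

struct Colour { float r, g, b; };
struct RampKey { float height; Colour colour; };

// Hypothetical ramp keyed on normalised height (0 = sea floor, 1 = highest peak).
const RampKey kTerrainRamp[] = {
    {0.00f, {0.10f, 0.25f, 0.60f}}, // blue ocean
    {0.30f, {0.85f, 0.80f, 0.55f}}, // sandy beach
    {0.40f, {0.20f, 0.55f, 0.15f}}, // green forest
    {0.70f, {0.45f, 0.35f, 0.25f}}, // brown rocky slopes
    {0.90f, {1.00f, 1.00f, 1.00f}}, // snow-capped peaks
};
const std::size_t kTerrainRampSize = sizeof(kTerrainRamp) / sizeof(kTerrainRamp[0]);

// Linearly interpolate between the two keys that bracket h; clamp outside the ramp.
Colour sampleRamp(const RampKey* keys, std::size_t count, float h)
{
    assert(keys != nullptr && count > 0);
    if (h <= keys[0].height)
        return keys[0].colour;
    for (std::size_t i = 1; i < count; ++i)
    {
        if (h <= keys[i].height)
        {
            const RampKey& lo = keys[i - 1];
            const RampKey& hi = keys[i];
            float t = (h - lo.height) / (hi.height - lo.height);
            return {lo.colour.r + t * (hi.colour.r - lo.colour.r),
                    lo.colour.g + t * (hi.colour.g - lo.colour.g),
                    lo.colour.b + t * (hi.colour.b - lo.colour.b)};
        }
    }
    return keys[count - 1].colour;
}
```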

I chose to use a simple Lambertian reflection model with a point light. Landscapes aren't generally shiny, and I wanted the lighting to stay consistent with the Blinn model I use for other objects in the scene (Lambertian reflection is the diffuse term of that model).
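The diffuse term itself is small. This C++ sketch computes the Lambertian factor max(0, N · L) for a point light on the CPU, which can be handy for checking the shader maths; it assumes a unit-length normal and applies no distance attenuation, and the function and type names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Float3 { float x, y, z; };

static float dot(Float3 a, Float3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Lambertian diffuse factor for a point light: max(0, N . L), where L is the unit
// vector from the surface point towards the light. `normal` must be unit length.
// No distance attenuation is applied (an assumption).
// HLSL equivalent: saturate(dot(normal, normalize(lightPos - worldPos))).
float lambertPointLight(Float3 position, Float3 normal, Float3 lightPosition)
{
    Float3 toLight{lightPosition.x - position.x,
                   lightPosition.y - position.y,
                   lightPosition.z - position.z};
    float distance = std::sqrt(dot(toLight, toLight));
    if (distance == 0.0f)
        return 0.0f; // light exactly at the surface point: direction undefined
    return std::max(0.0f, dot(normal, toLight) / distance);
}
```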

To generate an interesting surface, I used three textures. The first is a simple modulation map that adds some variation across the surface; this might represent different types of soil or drainage patterns. The other two are detail textures, one for grass and one for rock. The detail textures must be tileable, as they repeat many times over the surface of the map. To decide how much of each to use, I take the Y component of the surface normal as a blending factor: a completely flat plane has a value of 1 (100% grass) and a sheer cliff face has a value of 0 (100% rock).

Fragment Shader Code Extract

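```hlsl
// Interpolation across a triangle shortens the normal, so renormalise it.
float3 normal = normalize(input.normal);

// For an upward-facing height field normal.y lies in 0..1:
// 1 on flat ground, 0 on a vertical cliff. saturate() guards against rounding.
float blend = saturate(normal.y);

float2 detailUV = input.uv * 20.0f; // scale the texture coordinates so the detail textures tile

// Sample() returns a float4; keep only the colour channels.
float3 detailTextureSample = shaderTextureDetail.Sample(SampleTypeDetail, detailUV).rgb; // grass
float3 rockTextureSample = shaderTextureRock.Sample(SampleTypeRock, detailUV).rgb;

// Blend rock and grass based on the Y component of the normal (1 = all grass, 0 = all rock).
float3 aggregate = lerp(rockTextureSample, detailTextureSample, blend);
```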
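The extract does not show how the modulation map and the lighting are folded in. One plausible combination, sketched here as a CPU reference function, simply multiplies the blended grass/rock colour by the modulation sample and the Lambert factor; that multiplication and the `shadeTerrain` name are assumptions, not the shader used here.

```cpp
#include <algorithm>
#include <cassert>

struct Rgb { float r, g, b; };

// CPU reference of the per-pixel terrain colour, handy for checking shader maths offline.
// Assumption: the greyscale modulation sample and the Lambert diffuse factor both
// simply scale the blended grass/rock colour.
Rgb shadeTerrain(Rgb grass, Rgb rock, float normalY, float modulation, float diffuse)
{
    float blend = std::min(std::max(normalY, 0.0f), 1.0f); // HLSL saturate()
    float scale = modulation * diffuse;
    // lerp(rock, grass, blend): blend = 1 gives pure grass, 0 gives pure rock.
    auto mix = [blend, scale](float rockChannel, float grassChannel) {
        return (rockChannel + blend * (grassChannel - rockChannel)) * scale;
    };
    return {mix(rock.r, grass.r), mix(rock.g, grass.g), mix(rock.b, grass.b)};
}
```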

Results

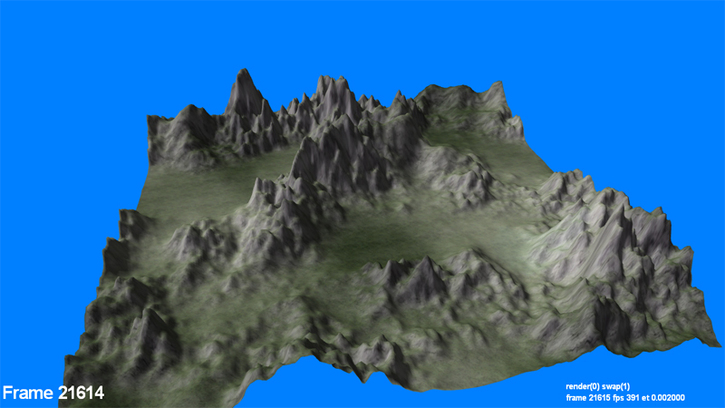

Here is a screenshot of the application running. In this example I have deliberately exaggerated the height scale. For a 3D exploration game you probably wouldn't want your mountains to be this high, but for a game like Scorched Earth it might be appropriate.

For a day's work I'm quite pleased with the results. Now I want to go and explore the worlds I've created :-)

Future Work

If you think about what we are doing here, we could model erosion, or have the landscape change in real time, simply by modifying the height field.
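As one example of such a change, a single pass of simple thermal erosion (material slides downhill wherever the height difference between neighbours exceeds a "talus" threshold) can be sketched as follows. Thermal erosion is a swapped-in illustrative technique, and the `talus` and `rate` parameters are hypothetical. Calling it repeatedly softens steep slopes while conserving the total amount of material.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One pass of simple thermal erosion on a width x depth height field.
// Wherever a cell is higher than a 4-connected neighbour by more than `talus`,
// a fraction of the excess slides downhill. Total material is conserved.
// Keep `rate` small (e.g. 0.25 or less): larger values can overshoot when a
// cell has several downhill neighbours.
void thermalErosionStep(std::vector<float>& heights, int width, int depth,
                        float talus, float rate)
{
    assert(width > 0 && depth > 0);
    assert(heights.size() == static_cast<std::size_t>(width) * depth);
    const int dx[4] = {1, -1, 0, 0};
    const int dz[4] = {0, 0, 1, -1};
    std::vector<float> delta(heights.size(), 0.0f); // read old heights, accumulate changes
    for (int z = 0; z < depth; ++z)
    {
        for (int x = 0; x < width; ++x)
        {
            std::size_t i = static_cast<std::size_t>(z) * width + x;
            for (int k = 0; k < 4; ++k)
            {
                int nx = x + dx[k], nz = z + dz[k];
                if (nx < 0 || nx >= width || nz < 0 || nz >= depth)
                    continue;
                std::size_t j = static_cast<std::size_t>(nz) * width + nx;
                float excess = heights[i] - heights[j] - talus;
                if (excess > 0.0f)
                {
                    // With rate = 1, moving half the excess brings an isolated
                    // pair exactly to the talus difference.
                    float moved = rate * 0.5f * excess;
                    delta[i] -= moved;
                    delta[j] += moved;
                }
            }
        }
    }
    for (std::size_t i = 0; i < heights.size(); ++i)
        heights[i] += delta[i];
}
```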

If we wanted to render water, this can also be done using a height field. We would need to update the heights and normals every frame, then use the normals in the shader to produce a shiny, reflective surface. Because the update is the same small calculation repeated for every sample in the grid, this would be a great opportunity to do some compute shader programming.
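A minimal sketch of the per-frame update, assuming a simple damped wave model in which each interior sample is pulled towards the average of its four neighbours. This is one common approach, not necessarily what a finished implementation would use, and `WaterGrid`, `stiffness` and `damping` are hypothetical names. Normals come from central differences of the heights. Each sample depends only on the previous frame's heights and its own velocity, so the inner loop body maps naturally onto one compute-shader thread per sample.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct WaterGrid
{
    int width, depth;
    std::vector<float> height;   // displacement of the water surface
    std::vector<float> velocity; // vertical velocity of each sample
};

struct Normal3 { float x, y, z; };

// One frame of a simple height-field wave simulation; border samples stay fixed.
void stepWater(WaterGrid& w, float stiffness, float damping)
{
    assert(w.height.size() == static_cast<std::size_t>(w.width) * w.depth);
    assert(w.velocity.size() == w.height.size());
    std::vector<float> next = w.height; // write new heights here, read old ones
    for (int z = 1; z < w.depth - 1; ++z)
    {
        for (int x = 1; x < w.width - 1; ++x)
        {
            std::size_t i = static_cast<std::size_t>(z) * w.width + x;
            float average = 0.25f * (w.height[i - 1] + w.height[i + 1] +
                                     w.height[i - w.width] + w.height[i + w.width]);
            w.velocity[i] = (w.velocity[i] + stiffness * (average - w.height[i])) * damping;
            next[i] = w.height[i] + w.velocity[i];
        }
    }
    w.height.swap(next);
}

// Unit normal of the water surface at an interior sample, from central differences.
// `spacing` is the world distance between samples.
Normal3 waterNormal(const WaterGrid& w, int x, int z, float spacing)
{
    assert(x > 0 && x < w.width - 1 && z > 0 && z < w.depth - 1);
    std::size_t i = static_cast<std::size_t>(z) * w.width + x;
    float dhdx = (w.height[i + 1] - w.height[i - 1]) / (2.0f * spacing);
    float dhdz = (w.height[i + w.width] - w.height[i - w.width]) / (2.0f * spacing);
    float len = std::sqrt(dhdx * dhdx + 1.0f + dhdz * dhdz);
    return {-dhdx / len, 1.0f / len, -dhdz / len};
}
```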

We could also add foliage and cities using rules like those mentioned earlier. Cities for instance could only exist in relatively flat areas of the map. I made an early PS3 sample that did this many years ago and the results were quite pleasing. One thing I am very interested in is the procedural generation of buildings so perhaps I will look at that next.
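Rules like these translate directly into predicates over per-sample terrain data. The sketch below derives slope and aspect from a unit surface normal and encodes the pine-tree rule from earlier plus a flat-ground rule for cities. It assumes +Z is north and +X is east in world space; the 5-degree city threshold and all names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct TerrainSample
{
    float altitudeFeet;
    float slopeDegrees;  // 0 = flat, 90 = vertical
    float aspectDegrees; // compass bearing the slope faces: 0 = north, 180 = south
};

// Derive slope and aspect from a unit surface normal (assumes +Z north, +X east).
TerrainSample makeSample(float altitudeFeet, float nx, float ny, float nz)
{
    const float kRadToDeg = 57.29577951f;
    float clampedY = std::fmax(-1.0f, std::fmin(1.0f, ny));
    // The horizontal part of the normal points downhill, i.e. the way the slope faces.
    float aspect = std::atan2(nx, nz) * kRadToDeg;
    if (aspect < 0.0f)
        aspect += 360.0f;
    return {altitudeFeet, std::acos(clampedY) * kRadToDeg, aspect};
}

// The pine-tree rule: above 2000 ft, south-facing, no steeper than 45 degrees.
bool pineCanGrow(const TerrainSample& s)
{
    bool southFacing = s.slopeDegrees > 0.0f &&
                       s.aspectDegrees >= 135.0f && s.aspectDegrees <= 225.0f;
    return s.altitudeFeet > 2000.0f && s.slopeDegrees <= 45.0f && southFacing;
}

// Cities only in relatively flat areas (hypothetical 5-degree threshold).
bool cityCanExist(const TerrainSample& s)
{
    return s.slopeDegrees <= 5.0f;
}
```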
